Preface

For many, it is a strange thing to see people celebrating diminishing returns when adding exponential amounts of compute and other resources. Then one day the fog clears. What is being celebrated is a predictable rate of improvement, some of which will unlock new capabilities, and when done often enough, for long enough, lead to new frontiers. While none of this is without its challenges or skeptics, understanding scaling laws is fundamental to understanding the investment thesis for current AI approaches, even if the tech seems limited today.

Introduction

Scaling laws have emerged as a cornerstone concept, guiding how models improve with increased resources like compute, data, and parameters. These empirical relationships, first prominently outlined in studies around 2020, explain why larger models tend to perform better and have fueled massive investments in AI infrastructure [1][2]. While they highlight diminishing returns, they also offer a roadmap for steady advancement, making them a celebrated tool in AI development.

What Are Scaling Laws?

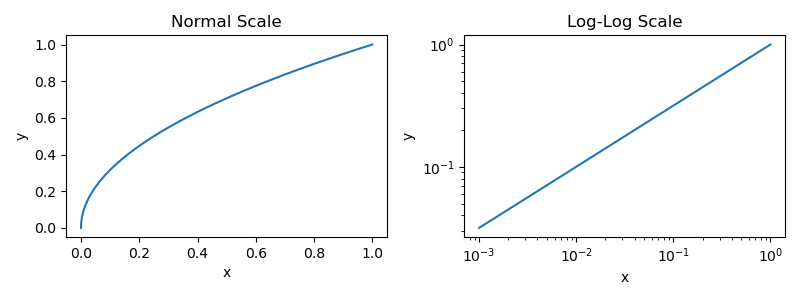

Scaling laws describe the predictable ways in which AI model performance improves as resources scale up. At their core, they capture relationships like test loss (a measure of prediction error) decreasing with compute, often expressed as loss ∝ compute^{-α}, where α is a small exponent around 0.05–0.1 for language models [1][3]. This means error rates drop sublinearly: doubling compute might reduce loss by just 3-4%, requiring exponential resource increases for linear gains [4]. Refined in works like the Chinchilla paper, these laws emphasize balancing parameters and data for optimal efficiency, showing that performance follows power-law curves across vast scales [2][5].

Why Are They Celebrated?

Scaling laws are praised for their predictability, providing a reliable framework in an otherwise uncertain field. Unlike trial-and-error approaches, they allow researchers to forecast performance gains from added resources, enabling confident planning for model development [6][7]. This shifts AI from guesswork to engineering-like precision, with belief that such laws hold across architectures and tasks [1][8].

Even Small Improvements Can Have Big Benefits

Modest reductions in metrics like per-token error can translate to capability leaps due to how tasks are evaluated. In multi-step problems, small accuracy gains compound: a 5% drop in error per token might flip overall success from failure to reliability, as errors multiply across sequences [9][10]. This leads to observed "emergent abilities," where models suddenly excel at new tasks like reasoning or arithmetic [11]. However, there is debate on whether these jumps are true phase transitions or artifacts of nonlinear metrics; some analyses suggest smooth underlying progress when using continuous scoring [12][13].

Even Small Improvements Seem Investment Worthy

The investment appeal stems from the laws' reliability: even sublinear gains accumulate into substantial progress over time, justifying billions in compute as models cross usability thresholds [6][14]. Consensus holds that this predictability reduces R&D risk, with hardware advancements like better GPUs mitigating costs [7]. While some note potential economic limits, the prevailing view is that scaling remains a high-return strategy for frontier AI [16].

Limitations and Open Questions

Despite their strengths, scaling laws face well-acknowledged limitations, including escalating demands for data, compute, and energy that may become unsustainable [17][18]. Data scarcity is a consensus bottleneck, as high-quality training data is finite, potentially capping progress unless mitigated by synthetic alternatives [19][20]. The next-token prediction paradigm itself has inherent limits, struggling with long-term planning or true understanding beyond patterns [18]. Environmentally, the energy consumption of large-scale training raises agreed-upon concerns about carbon footprints [17]. There is debate on whether these laws will hold indefinitely or break at extreme scales, with some evidence of slowing improvements challenging earlier predictions [21][22]. Open questions include the sources of future breakthroughs—whether from continued scaling or new architectures—and how to address generalization gaps in real-world applications [19].

What This Means for the Future

Looking ahead, scaling laws suggest continued progress but with escalating challenges. As compute demands grow for marginal gains, we may hit practical walls in energy, data availability, or economics, prompting a shift toward hybrid approaches like algorithmic efficiencies or multimodal training [17][18]. Speculatively, if laws hold, models could approach human-level versatility, but breakthroughs in alternatives (e.g., neuromorphic computing) might disrupt [19]. Debates intensify here: optimists predict sustained scaling via innovation, while skeptics foresee plateaus forcing paradigm shifts [20][21].

Conclusion

AI scaling laws encapsulate the tension between diminishing returns and reliable advancement, transforming resource-intensive pursuits into strategic investments. By offering a clear path forward, they underscore why AI's future, though resource-hungry, remains brightly promising—provided we navigate emerging limits wisely.

References

[1] Scaling Laws for Neural Language Models. https://arxiv.org/abs/2001.08361

[2] Training Compute-Optimal Large Language Models. https://arxiv.org/abs/2203.15556

[3] Explaining Neural Scaling Laws. https://arxiv.org/html/2102.06701v2

[4] Neural scaling law. https://en.wikipedia.org/wiki/Neural_scaling_law

[5] Scaling Laws for LLMs: From GPT-3 to o3. https://cameronrwolfe.substack.com/p/llm-scaling-laws

[6] How Scaling Laws Drive Smarter, More Powerful AI. https://blogs.nvidia.com/blog/ai-scaling-laws/.

[7] The Scaling Paradox. https://www.forethought.org/research/the-scaling-paradox

[8] On AI Scaling. https://www.lesswrong.com/posts/WHrig3emEAzMNDkyy/on-ai-scaling

[9] Common arguments regarding emergent abilities. https://www.jasonwei.net/blog/common-arguments-regarding-emergent-abilities

[10] Emergent Abilities in Large Language Models: An Explainer. https://cset.georgetown.edu/article/emergent-abilities-in-large-language-models-an-explainer/

[11] Emergent Abilities of Large Language Models. https://arxiv.org/abs/2206.07682

[12] Are Emergent Abilities of Large Language Models a Mirage? https://arxiv.org/abs/2304.15004

[13] AI's Ostensible Emergent Abilities Are a Mirage. https://hai.stanford.edu/news/ais-ostensible-emergent-abilities-are-mirage

[14] Scaling Laws in AI: Why Bigger Models Keep Winning. https://ai.plainenglish.io/scaling-laws-in-ai-why-bigger-models-keep-winning-60d6ecc0f360

[16] Current AI scaling laws are showing diminishing returns... https://techcrunch.com/2024/11/20/ai-scaling-laws-are-showing-diminishing-returns-forcing-ai-labs-to-change-course/

[17] The Limits of AI Scaling Laws. https://medium.com/@ddgutierrez/the-limits-of-ai-scaling-laws-c8784792e570

[18] Has AI scaling hit a limit? https://foundationcapital.com/has-ai-scaling-hit-a-limit/

[19] Five Key Issues to Watch in AI in 2025. https://cset.georgetown.edu/article/five-key-issues-to-watch-in-ai-in-2025/

[20] A brief history of LLM Scaling Laws and what to expect in 2025. https://www.jonvet.com/blog/llm-scaling-in-2025

[22] What's going on with AI progress and trends? (As of 5/2025). https://www.lesswrong.com/posts/v7LtZx6Qk5e9s7zj3/what-s-going-on-with-ai-progress-and-trends-as-of-5-2025.