Introduction

The recent blog post "Who's Driving the Conversation — You or the LLM?" highlighted that when an LLM acts as a companion, the burden is on the LLM to emulate the experience of interacting with a human, whereas when the LLM is used as a productivity tool, the burden is on the human. That burden is to articulate intent and objectives in a way that elicits the desired responses from the LLM, responses that vary from inference to inference and from model to model.

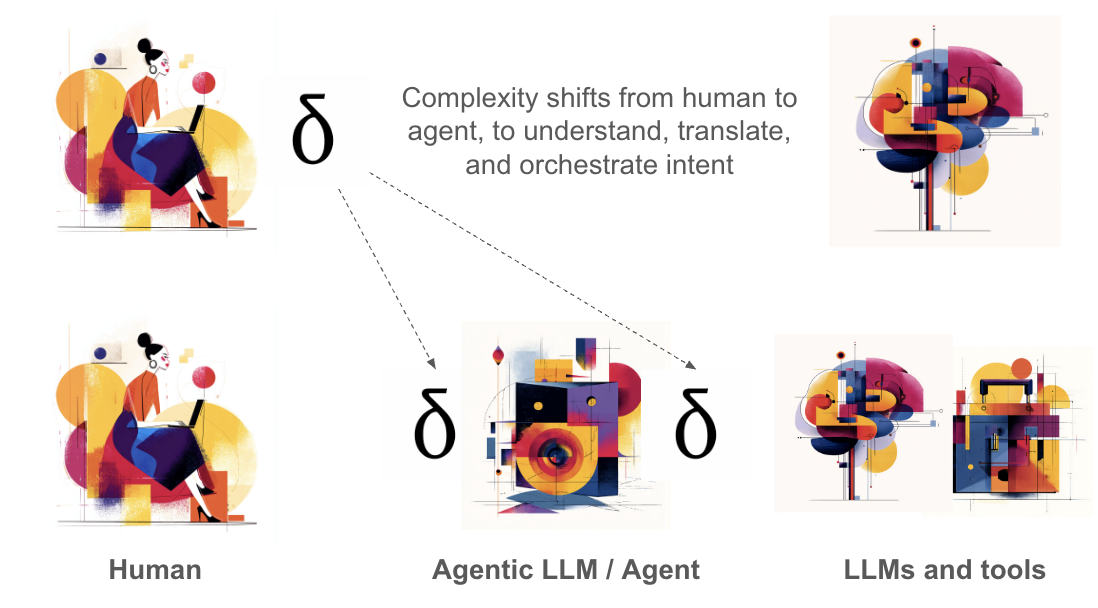

Agents mitigate that new complexity in our lives: how do we get LLMs to do what we want them to do? They may not do so entirely or without errors. Still, whether by design or not, they shift complexity away from the human's input to the LLM and toward the agent's translation of that input. In assessing the impact of complexity on velocity, we should always ask where the complexity now sits and how it can be relocated or reduced to improve velocity.

Ask a simple question: which is more likely to be fast, efficient, and repeatable?

- A human designing and executing prompts and processes
- An agent translating varied expressions of intent into plans and processes

It is not a slam dunk that the agent is always the better choice, but it may be the better choice in a growing number of cases, and it may also construct ad hoc processes.

The cognitive translators

Humans and large language models (LLMs) don't speak the same native language. We communicate through context, shorthand, and shared understanding; for productivity tasks, LLMs rely on explicit structure, complete context, and literal instruction.

That mismatch is one of the reasons AI agents have emerged as the crucial middleware layer between people, models, and tools.

Agents act like cognitive translators.

They take vague, natural requests—"Plan me a marketing campaign" or "Help me with next quarter's goals"—and decompose them into explicit, machine-usable prompts: audience analysis, timeline design, budget forecasting.

Instead of the human learning prompt engineering, the agent does it for them. In practice, these systems wrap an LLM with planning modules, memory, and tool integrations, turning one-shot prompts into multi-step reasoning chains.
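To make the decomposition concrete, here is a minimal sketch of an agent that turns one vague request into explicit sub-task prompts and carries earlier answers forward as memory. Everything in it is an assumption for illustration: the `Agent` class, the `stub_llm` function, and the fixed list of sub-tasks are hypothetical, and a real agent would more likely ask the model itself to produce the plan and would call an actual LLM client.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical type for a model call: takes a prompt, returns text.
LLM = Callable[[str], str]


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model so the sketch runs offline."""
    # Echo the last line of the prompt, which is the current sub-task.
    return f"[stub answer to: {prompt.splitlines()[-1]}]"


@dataclass
class Agent:
    llm: LLM
    memory: List[str] = field(default_factory=list)

    def plan(self, request: str) -> List[str]:
        """Decompose a vague request into explicit sub-task prompts."""
        # Assumption: a fixed plan keeps the example deterministic.
        subtasks = ["audience analysis", "timeline design", "budget forecasting"]
        return [
            f"Task: {task}. Overall goal: {request}. Answer concisely."
            for task in subtasks
        ]

    def run(self, request: str) -> List[str]:
        """Execute each sub-task in order, feeding prior answers as context."""
        results = []
        for prompt in self.plan(request):
            context = "\n".join(self.memory)
            full_prompt = f"{context}\n{prompt}" if context else prompt
            answer = self.llm(full_prompt)
            self.memory.append(answer)  # later steps can see this answer
            results.append(answer)
        return results


if __name__ == "__main__":
    agent = Agent(llm=stub_llm)
    for line in agent.run("Plan me a marketing campaign"):
        print(line)
```

The human supplies a single sentence; the explicit structure, the ordering of steps, and the carrying of context all live in the agent. That is the sense in which the complexity has moved rather than disappeared.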

In companionship settings, they may use routing or "mixture of experts" designs to keep dialogue natural and consistent. But this doesn't erase complexity—it repositions it.

The interpretive burden moves from the human–prompt interface to the human–agent interface. Agents abstract away syntax, but semantics and judgment may still require humans in the loop.

Takeaway

Why is this important? Every technology adoption curve raises the question of how quickly a workforce can learn the technology and become proficient enough to realize productivity gains. By shifting complexity from humans to agentic LLMs, agents can be a productivity accelerator.

Life has never been without agents that reduce complexity; human travel agents are one earlier example. What is changing is the nature of the agent.