Introduction

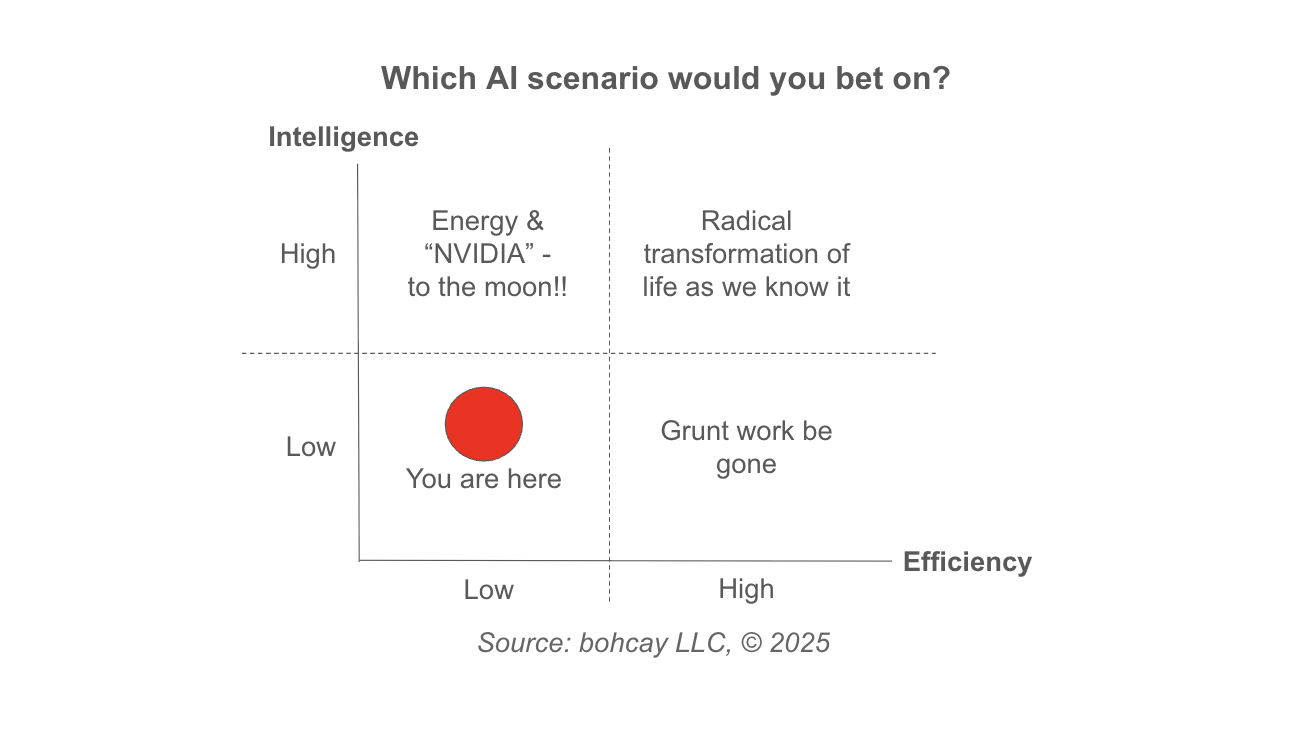

This post introduces the Intelligence vs Efficiency matrix, which emerged from the Velocity Framework used as context for research and analysis. This 2x2 provides one lens on AI, focusing specifically on intelligence and efficiency. Intelligence is the goal. Inefficiency is one aspect of the complexity coefficient (δ) that threatens to slow AI's productive output, if it has not already, and it contributes to the pressure on compute and power resources.

As individual investors, industry analysts, business planners, strategists, and consultants, we ask ourselves a simple question: which of these scenarios is most likely, and what other scenarios should be considered? This analysis discusses one set of possible scenarios, albeit one based on two of the most important issues: intelligence and efficiency.

History Repeats in Energy and Intelligence

Major technological revolutions tend to follow a pattern:

- massive energy input →

- efficiency breakthrough →

- exponential adoption.

Here are some examples:

- Steam Power (18th century): Early engines were crude and inefficient, converting around 0.5-1% of thermal energy into motion. Once Watt's improvements raised efficiency to 2-5%, the Industrial Revolution took off, transforming labor, transport, and trade.

- Electrification (19th–20th centuries): Initially, electricity was costly and fragile, confined to large cities. As grid efficiency climbed from ~6% in 1900 to over 40% by 1980, it became an invisible backbone of civilization, including rural electrification in the 1950s.

- Computing (20th century): The first computers consumed enormous amounts of energy for minimal calculations. The transistor’s arrival improved energy efficiency per operation by a factor of ~10^9 in fifty years (Koomey’s Law).

- Automation and Robotics (late 20th century): Industrial automation reached the “grunt work be gone” phase, displacing repetitive labor while unlocking creative and service economies.

AI, in this sense, isn’t breaking the pattern. It is replaying it at digital speed, standing on the shoulders of the Internet and cloud computing revolutions.

The Energy Curve of AI

We are now on the upstroke of AI’s steam age: high potential but high inefficiency. Training a frontier model consumes millions of kilowatt-hours, roughly equivalent to powering thousands of homes for a year. Inference is power hungry as well, and always on.

According to recent estimates:

- Global data center electricity demand could reach 945 TWh by 2030, roughly equivalent to Japan's annual use (~913 TWh).

- Model training efficiency (measured in FLOPS per watt) has improved ~5x in the past five years, yet compute demand has outpaced that gain by over 1,000x.

In other words: our token factories are scaling faster than efficiency. This imbalance lands us firmly in the “Energy & NVIDIA – to the moon!!” quadrant.
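
To make the imbalance concrete, here is a rough back-of-the-envelope sketch. It assumes one reading of the figures above: that compute demand itself grew about 1,000x over the period in which FLOPS per watt improved about 5x. The function name `implied_energy_growth` and that interpretation are illustrative assumptions, not part of the cited estimates.

```python
def implied_energy_growth(compute_growth: float, efficiency_gain: float) -> float:
    """Return the energy multiplier implied when compute demand grows by
    `compute_growth` while efficiency (FLOPS per watt) improves by
    `efficiency_gain` over the same period."""
    if compute_growth <= 0 or efficiency_gain <= 0:
        raise ValueError("growth factors must be positive")
    # Energy scales with compute performed divided by compute per unit of energy.
    return compute_growth / efficiency_gain


# Assumed reading of the estimates: ~1,000x compute demand, ~5x efficiency.
growth = implied_energy_growth(compute_growth=1000.0, efficiency_gain=5.0)
print(f"Implied growth in energy use: ~{growth:.0f}x")
```

Under that reading, energy use would still rise by roughly two orders of magnitude despite the efficiency gain. If the 1,000x is instead read as the gap that remains after efficiency gains are netted out, the implied energy growth is simply about 1,000x.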